Thesis Defense

Event details

- Start: 13 November 2023

- 12:00

- Auditorium of the Centro de Visión por Computador, and online via Teams

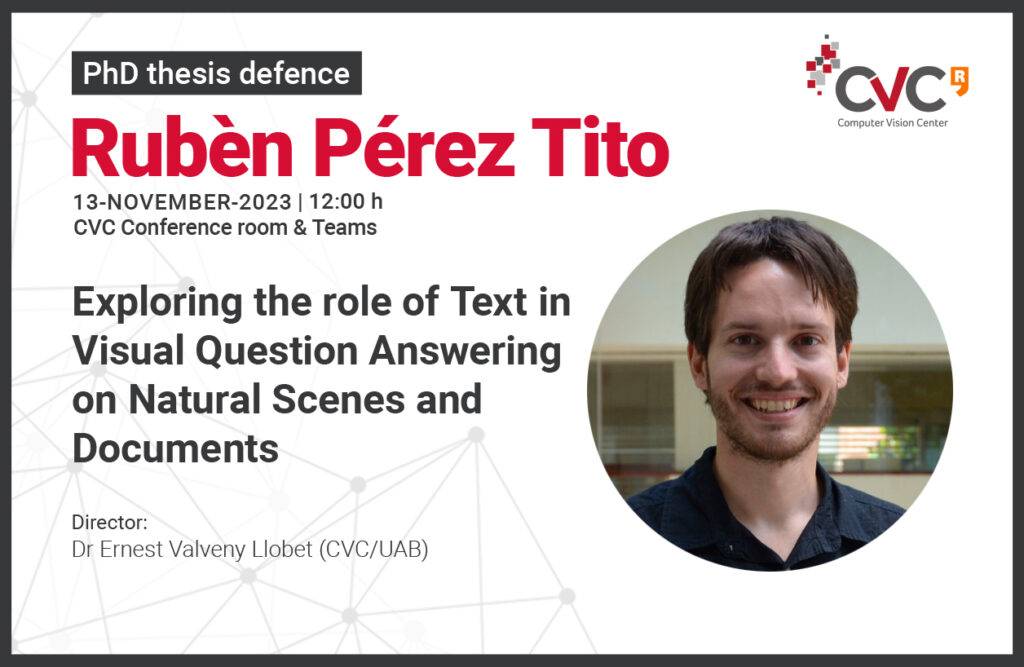

Rubèn Pérez Tito will defend his doctoral thesis on 13 November at 12:00 in the auditorium of the Centro de Visión por Computador and online via Teams. The thesis is titled "Exploring the role of Text in Visual Question Answering on Natural Scenes and Documents" and is supervised by Dr. Ernest Valveny Llobet (CVC/UAB).

Abstract

Visual Question Answering (VQA) is the task of generating a natural language answer to a natural language question about an image. Lying at the intersection of computer vision and natural language processing, this task can be seen as a measure of image understanding capabilities. It requires reasoning about objects, actions, colors, positions and the relations between different elements, as well as commonsense reasoning, world knowledge, arithmetic skills and natural language understanding. Text present in images conveys important, semantically rich information that is explicit and not available in any other form. Even so, most VQA methods have remained illiterate, largely ignoring this text despite its potential significance. In this thesis, we set out to bring reading capabilities to computer vision models applied to the VQA task. We create new datasets and methods that can read text, reason about it and integrate it with other visual cues in natural scene images and documents.

First, we address the combination of scene text with visual information to fully understand the nuances of natural scene images. To achieve this objective, we define a new sub-task of VQA that requires reading the text in the image, and we highlight the limitations of current methods on it. In addition, we propose a new architecture that integrates both modalities and jointly reasons about textual and visual features.

Next, we move VQA with reading capabilities to a new domain: scanned industry document images. This brings a high-level, end-purpose perspective to Document Understanding, a field that has primarily focused on digitizing document contents and extracting key values without considering the ultimate purpose of the extracted information. To this end, we create a dataset that requires methods to reason about the unique and challenging elements of documents to provide accurate answers in natural language. These elements include text, images, tables, graphs and complex layouts. However, we observe that explicit visual features contribute only slightly to overall performance, since most of the information is usually conveyed by the text and its position. Consequently, we propose VQA on infographic images. These are document images with more visually rich elements, which require methods to fully exploit visual information to answer the questions. We show the performance gap of different methods between scanned industry documents and infographics. We also propose a new method that integrates visual features at an early stage, allowing the transformer architecture to exploit them during the self-attention operation.

We then apply VQA to a large collection of single-page documents, where methods must find which documents are relevant to the question and also provide the answer itself. Finally, we address the multi-page Document Visual Question Answering task, mimicking real-world applications in which systems must process documents with multiple pages. We demonstrate the limitations of existing methods on this task, including models specifically designed to process long sequences. To overcome these limitations, we propose a hierarchical architecture that can process long documents and answer questions. As an explainability measure, it also provides the index of the page that contains the information needed to answer the question.

Keywords: Vision and Language, Computer Vision, Pattern Recognition, Deep Learning, Scene Text Visual Question Answering, Document Visual Question Answering, Document Collections, Multipage Documents.