Thesis Defense

Event details

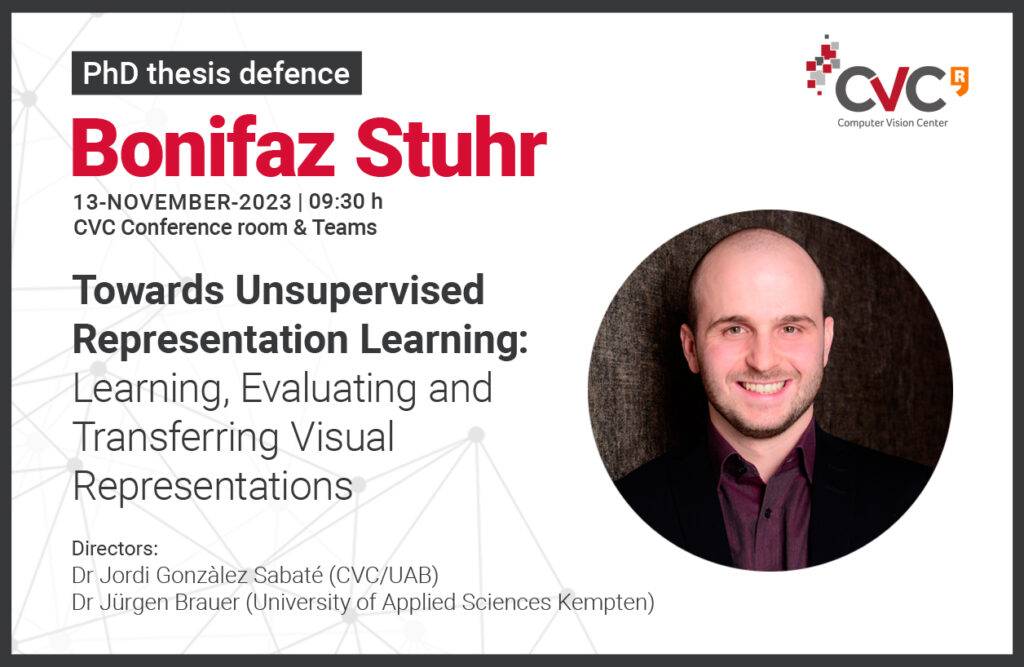

- Start: 13 November 2023

- 9:30

- Conference hall (Sala d'actes) of the Centro de Visión por Computador & Teams

The doctoral thesis defense of Bonifaz Stuhr will take place on 13 November at 9:30 in the conference hall (Sala d'actes) of the Centro de Visión por Computador and on Teams. The thesis is titled "Towards Unsupervised Representation Learning: Learning, Evaluating and Transferring Visual Representations" and is supervised by Dr. Jordi Gonzàlez Sabaté (CVC/UAB) and Dr. Jürgen Brauer (University of Applied Sciences Kempten).

Abstract

Unsupervised representation learning aims at finding methods that learn representations from data without annotation-based signals. Abstaining from annotations not only leads to economic benefits but may, and to some extent already does, result in advantages regarding the representation’s structure, robustness, and generalizability to different tasks. In the long run, unsupervised methods are expected to surpass their supervised counterparts due to the reduction of human intervention and the inherently more general setup that does not bias the optimization towards an objective originating from specific annotation-based signals. While major advantages of unsupervised representation learning have been recently observed in natural language processing, supervised methods still dominate in vision domains for most tasks.

In this dissertation, we contribute to the field of unsupervised (visual) representation learning from three perspectives: (i) Learning representations: We design unsupervised, backpropagation-free Convolutional Self-Organizing Neural Networks (CSNNs) that utilize self-organization- and Hebbian-based learning rules to learn convolutional kernels and masks to achieve deeper backpropagation-free models. Thereby, we observe that both backpropagation-based and backpropagation-free methods can suffer from an objective function mismatch between the unsupervised pretext task and the target task. This mismatch can lead to performance decreases for the target task. (ii) Evaluating representations: We build upon the widely used (non-)linear evaluation protocol to define pretext- and target-objective-independent metrics for measuring the objective function mismatch. With these metrics, we evaluate various pretext and target tasks and reveal how the objective function mismatch depends on different parts of the training and model setup. (iii) Transferring representations: We contribute CARLANE, the first 3-way sim-to-real domain adaptation benchmark for 2D lane detection. We adopt several well-known unsupervised domain adaptation methods as baselines and propose a method based on prototypical cross-domain self-supervised learning. Finally, we focus on pixel-based unsupervised domain adaptation and contribute a content-consistent unpaired image-to-image translation method that utilizes masks, global and local discriminators, and similarity sampling to mitigate content inconsistencies, as well as feature-attentive denormalization to fuse content-based statistics into the generator stream. In addition, we propose the cKVD metric to incorporate class-specific content inconsistencies into perceptual metrics for measuring translation quality.

Keywords: unsupervised representation learning, unsupervised domain adaptation, unpaired image-to-image translation, computer vision.